Ronghang Hu, Anna Rohrbach, Trevor Darrell, Kate Saenko

Abstract

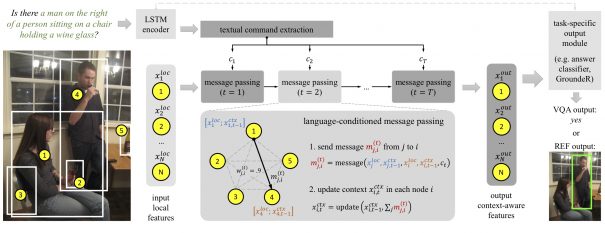

Solving grounded language tasks often requires reasoning about relationships between objects in the context of a given task. For example, to answer the question "What color is the mug on the plate?" we must check the color of the specific mug that satisfies the "on" relationship with respect to the plate. Recent work has proposed various methods capable of complex relational reasoning. However, most of their power lies in the inference structure, while the scene is represented with simple local appearance features. In this paper, we take an alternative approach and build contextualized representations for objects in a visual scene to support relational reasoning. We propose a general framework of Language-Conditioned Graph Networks (LCGN), where each node represents an object and is described by a context-aware representation gathered from related objects through iterative message passing conditioned on the textual input. For example, conditioned on the "on" relationship to the plate, the object "mug" gathers messages from the object "plate" to update its representation to "mug on the plate", which a simple classifier can then easily consume for answer prediction. We experimentally show that our LCGN approach effectively supports relational reasoning and improves performance across several tasks and datasets.
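To make the idea of language-conditioned message passing concrete, here is a minimal pure-Python sketch of the mechanism described above. It is not the authors' implementation: it assumes a simplified form with no learned weight matrices (a trained model would have them), edge scores computed as a dot product between two object features with each dimension gated by a text "command" vector, a softmax over the other objects, and a residual update. The per-iteration command vectors are simply given here, standing in for whatever the text encoder would produce from the question. The function names, the toy feature dimensions, and the `on_the_plate` vector are all hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a non-empty list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def edge_weights(nodes: List[Vector], command: Vector, i: int) -> List[float]:
    """Attention weights of node i over all other nodes, conditioned on the command.

    score(i, j) = sum_k nodes[i][k] * nodes[j][k] * command[k], i.e. a dot
    product between the two node features in which each dimension is gated
    by the command. Entry i stays at 0 (no self-message in this sketch).
    """
    others = [j for j in range(len(nodes)) if j != i]
    scores = [
        sum(a * b * c for a, b, c in zip(nodes[i], nodes[j], command))
        for j in others
    ]
    weights = [0.0] * len(nodes)
    for j, w in zip(others, softmax(scores)):
        weights[j] = w
    return weights


def message_passing_step(nodes: List[Vector], command: Vector) -> List[Vector]:
    """One round: every node gathers command-gated messages from the others."""
    if len(nodes) < 2:
        raise ValueError("need at least two objects to pass messages")
    updated = []
    for i, x_i in enumerate(nodes):
        w = edge_weights(nodes, command, i)
        # Message: attention-weighted sum of neighbour features, gated by the command.
        msg = [
            sum(w[j] * nodes[j][k] * command[k] for j in range(len(nodes)))
            for k in range(len(x_i))
        ]
        # Residual update: keep the node's own feature and add the context message.
        updated.append([a + m for a, m in zip(x_i, msg)])
    return updated


def lcgn_sketch(nodes: List[Vector], commands: List[Vector]) -> List[Vector]:
    """Run one message-passing round per command vector (one per iteration)."""
    for command in commands:
        nodes = message_passing_step(nodes, command)
    return nodes


# Toy scene; hypothetical feature dims: [mug, plate, "on"-relation cue]
mug, plate, table = [1.0, 0.0, 1.0], [0.0, 1.0, 1.0], [0.0, 0.0, 0.0]
on_the_plate = [0.0, 1.0, 1.0]  # hypothetical command vector for "on the plate"
contextual = lcgn_sketch([mug, plate, table], [on_the_plate])
print(contextual[0])  # the mug now carries plate information in dimension 1
```

In this toy scene the mug attends more strongly to the plate than to the table under the "on the plate" command, so its updated vector picks up a nonzero "plate" component: a crude analogue of the "mug on the plate" representation that a downstream classifier could read directly.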

Publications

- R. Hu, A. Rohrbach, T. Darrell, K. Saenko. Language-Conditioned Graph Networks for Relational Reasoning. In ICCV, 2019.


@inproceedings{hu2019language,
  title={Language-Conditioned Graph Networks for Relational Reasoning},
  author={Hu, Ronghang and Rohrbach, Anna and Darrell, Trevor and Saenko, Kate},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision (ICCV)},
  year={2019}
}

Code

- Code (in both TensorFlow and PyTorch) is available here.

- We also release our simple but well-performing "single-hop" baseline for the GQA dataset in a standalone repo. This "single-hop" model can serve as a basis for developing more complicated models.